A Review of The Impact of Deep Learning on the Analysis of Cosmological Galaxy Surveys

slides at eiffl.github.io/talks/CoPhy2023

The Deep Learning Boom in Astrophysics

astro-ph abstracts mentioning Deep Learning, CNN, or Neural Networks

Will AI Revolutionize the Scientific Analysis of Cosmological Surveys?

Review of the impact of Deep Learning in Galaxy Survey Science

https://ml4astro.github.io/dawes-review

outline of this talk

My goal for today: tour of Deep Learning applications relevant to cosmological physical inference.

- Interpreting Increasingly Complex Images

- Accelerating Numerical Simulations

- Simulation-Based Cosmological Inference

Interpreting Increasingly Complex Data

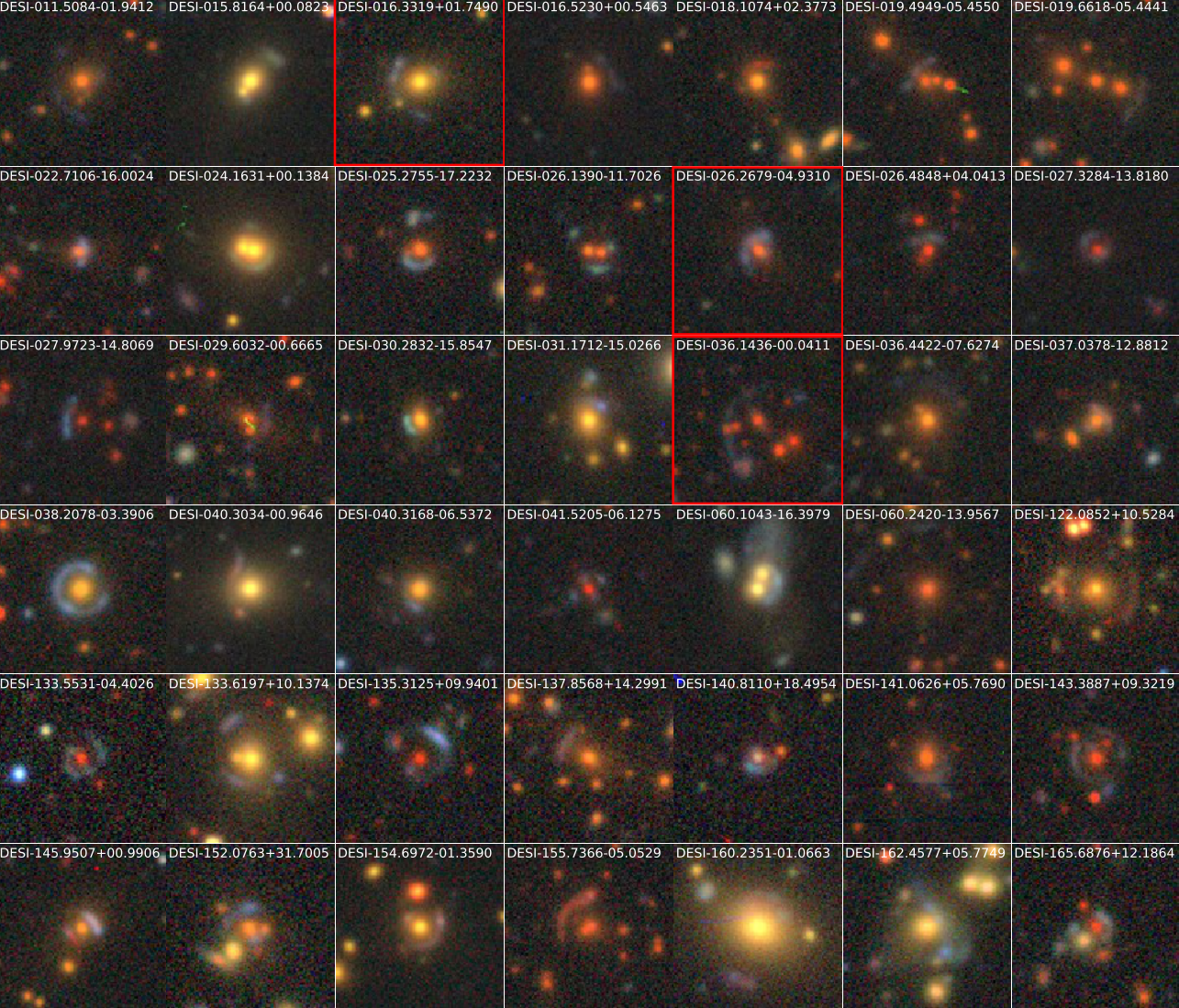

Dieleman+15, Huertas-Company+15, Aniyan+17, Charnock+17, Gieseke+17, Jacobs+17, Petrillo+17, Schawinski+17, Alhassan+18, Dominguez-Sanchez+18, George+18, Hinners+18, Lukic+18, Moss+18, Razzano+18, Schaefer+18, Allen+19, Burke+19, Carrasco-Davis+19, Chatterjee+19, Davies+19, Dominguez-Sanchez+19, Fusse+19, Glaser+19, Ishida+19, Jacobs+19, Katebi+19, Lanusse+19, Liu+19, Lukic+19, Metcalf+19, Muthukrishna+19, Petrillo+19, Reiman+19, Boucaud+20, Chiani+20, Ghosh+20, Gomez+20, Hausen+20, Hlozek+20, Hosseinzadeh+20, Huang+20, Li+20, Moller+20, Paillassa+20, Tadaki+20, Vargas dos Santos+20, Walmsley+20, Wei+20, Allam+21, Arcelin+21, Becker+21, Bretonniere+21, Burhanudin+21, Davison+21, Donoso-Oliva+21, Jia+21, Lauritsen+21, Ono+21, Ruan+21, Sadegho+21, Tang+21, Tanoglidis+21, Vojtekova+21, Dhar+22, Hausen+22, Orwat+22, Pimentel+22, Rezaei+22, Samudre+22, Shen+22, Walmsley+22

Credit: NAOJ

Detection and Classification of Astronomical Objects

Credit: NAOJ

A perfect task for Deep Learning!

Inference at the Image Level

takeaways

Is Deep Learning Really Changing the Game in Interpreting Survey Data?

- For Detection, Classification, Cleaning tasks: Yes!

  $\Longrightarrow$ As long as we don't need to understand precisely the model response/selection function.

- For inferring physical properties needed in downstream analysis: Not really...

  - In general the exact response is not known, and very non-linear.

  - Not addressing the core question of representativeness of training data.

Accelerating Cosmological Simulations with Deep Learning

Rodriguez+19, Modi+18, Berger+18, He+18, Zhang+19, Troster+19, Zamudio-Fernandez+19, Perraudin+19, Charnock+19, List+19, Giusarma+19, Bernardini+19, Chardin+19, Mustafa+19, Ramanah+20, Tamosiunas+20, Feder+20, Moster+20, Thiele+20, Wadekar+20, Dai+20, Li+20, Lucie-Smith+20, Kasmanoff+20, Ni+21, Rouhiainen+21, Harrington+21, Horowitz+21, Horowitz+21, Bernardini+21, Schaurecker+21, Etezad-Razavi+21, Curtis+21

AI-assisted superresolution cosmological simulations

x8 super-resolution

- Inputs low resolution particle displacement field, outputs samples from a distribution $p_\theta(x_{SR} | x_{LR})$

Fast, high-fidelity Lyman α forests with CNNs

are these Deep Learning models a real game-changer?

The Limitations of Black-Box Large Deep Learning Approaches

- There is a risk that a large Deep Learning model can silently fail.

  $\Rightarrow$ How can we build confidence in the output of the neural network?

- Training these models can require a very large number of simulations.

  $\Rightarrow$ Do they bring a net computational benefit?

  - In the case of the super-resolution model of Li et al. (2021), only 16 full-resolution $512^3$ N-body simulations were necessary.

- In many cases, the accuracy (not the quantity) of simulations will be the main bottleneck.

  $\Rightarrow$ What new science are these deep learning models enabling?

  - In the case of cosmological SBI, they do not help us resolve the uncertainty on the simulation model.

(Harrington et al. 2021)

What Would be Desirable Properties of robust ML-based Emulation Methods?

- Make use of known symmetries and physical constraints.

- Modeling residuals to an approximate physical model

- Minimally parametric

- Can be trained with a very small number of simulations

- Could potentially be inferred from data!

Learning effective physical laws for generating cosmological hydrodynamics with Lagrangian Deep Learning

- The Lagrangian Deep Learning approach:

  - Run an approximate Particle-Mesh DM simulation (about 10 steps)

  - Introduce a displacement $\mathbf{S}$ of particles:

    \begin{equation} \mathbf{S} = -\alpha \nabla \hat{O}_{G} f(\delta) \end{equation}

    where $\hat{O}_{G}$ is a parametric Fourier-space filter and $f$ is a parametric function of $\delta$.

    $\Rightarrow$ Respects translational and rotational symmetries.

  - Apply a non-linear function on the resulting density field $\delta^\prime$: $$F(x) = \mathrm{ReLU}(b_1 f(\delta^\prime) - b_0)$$

$\Longrightarrow$ Only need to fit ~10 parameters to reproduce a desired field from a hydrodynamical simulation.
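The displacement step can be sketched on a periodic grid with NumPy FFTs. This is only an illustration under stated assumptions: the power-law source $f(\delta) = (1+\delta)^\gamma$, the isotropic Gaussian filter standing in for $\hat{O}_{G}$, and every name and default value (`ldl_displacement`, `alpha`, `gamma`, `k_smooth`) are hypothetical choices, not the parametrization used in the published method. Because the filter depends only on $|k|$, shifting the input field simply shifts the output displacement.

```python
import numpy as np

def ldl_displacement(delta, alpha=0.1, gamma=1.0, k_smooth=20.0, box_size=1.0):
    """Displacement field S = -alpha * grad(O_G f(delta)) on a periodic 3D grid.

    Assumptions (illustrative only): f(delta) = (1 + delta)**gamma and
    O_G(k) = exp(-k^2 / k_smooth^2), an isotropic Fourier-space filter.
    """
    n = delta.shape[0]
    k1d = 2.0 * np.pi * np.fft.fftfreq(n, d=box_size / n)
    kx, ky, kz = np.meshgrid(k1d, k1d, k1d, indexing="ij")
    k2 = kx**2 + ky**2 + kz**2

    source = (1.0 + delta) ** gamma           # parametric function f(delta)
    filt = np.exp(-k2 / k_smooth**2)           # parametric filter O_G(k)
    phi_k = filt * np.fft.fftn(source)         # filtered source in Fourier space

    # A gradient in real space is a multiplication by i*k in Fourier space.
    components = [-alpha * np.fft.ifftn(1j * k * phi_k).real for k in (kx, ky, kz)]
    return np.stack(components, axis=0)        # shape (3, n, n, n)

rng = np.random.default_rng(0)
delta = rng.uniform(-0.5, 0.5, size=(16, 16, 16))
S = ldl_displacement(delta)

# Translating the density field translates the displacement field.
S_shifted = ldl_displacement(np.roll(delta, 3, axis=0))
```

In this sketch the only free parameters are `alpha`, `gamma` and `k_smooth`, which is the sense in which such a model is "minimally parametric".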

takeaways

- Deep Learning may allow us to scale up existing simulation suites (with caveats), but it is not replacing simulation codes.

- One exciting prospect rarely explored so far in astrophysics is using Deep Learning to accelerate the N-body/hydro solver.

https://sites.google.com/view/meshgraphnets

(Pfaff et al. 2021)

Simulation-Based Cosmological Inference

Ravanbakhsh+17, Brehmer+19, Ribli+19, Pan+19, Ntampaka+19, Alexander+20, Arjona+20, Coogan+20, Escamilla-Rivera+20, Hortua+20, Vama+20, Vernardos+20, Wang+20, Mao+20, Arico+20, Villaescusa-Navarro+20, Singh+20, Park+21, Modi+21, Villaescusa-Navarro+21ab, Moriwaki+21, DeRose+21, Makinen+21, Villaescusa-Navarro+22

the forward modeling road to cosmological inference

- Instead of trying to analytically evaluate the likelihood $p(x | \theta)$, let us build a forward model of the observables.

  $\Longrightarrow$ The simulator becomes the physical model.

- Each component of the model is now tractable, but at the cost of a large number of latent variables.

Benefits of a forward modeling approach

- Fully exploits the information content of the data (aka "full field inference").

- Easy to incorporate systematic effects.

- Easy to combine multiple cosmological probes by joint simulations.

(Schneider et al. 2015)

...so why is this not mainstream?

The Challenge of Simulation-Based Inference

$$ p(x|\theta) = \int p(x, z | \theta) dz = \int p(x | z, \theta) p(z | \theta) dz $$

where $z$ are stochastic latent variables of the simulator.

$\Longrightarrow$ This marginal likelihood is intractable! Hence the phrase "Likelihood-Free Inference"
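To make the integral concrete, here is a toy sketch with a single latent variable, where brute-force Monte Carlo over $z$ still works and can be checked against a closed form. The toy simulator ($z \sim \mathcal{N}(\theta, 1)$, $x \sim \mathcal{N}(z, 0.5^2)$) and the function names are assumptions made for illustration. A cosmological simulator has millions of latent variables, which is why this direct approach does not scale.

```python
import numpy as np

def log_normal_pdf(x, mean, std):
    """Log-density of a 1D Gaussian."""
    return -0.5 * ((x - mean) / std) ** 2 - np.log(std * np.sqrt(2.0 * np.pi))

def mc_marginal_likelihood(x, theta, noise_std=0.5, n_latent=200_000, seed=0):
    """Estimate p(x | theta) = E_{z ~ p(z | theta)}[ p(x | z, theta) ] by sampling z."""
    rng = np.random.default_rng(seed)
    z = rng.normal(loc=theta, scale=1.0, size=n_latent)   # z ~ p(z | theta)
    return np.mean(np.exp(log_normal_pdf(x, z, noise_std)))

x_obs, theta = 0.7, 0.2
estimate = mc_marginal_likelihood(x_obs, theta)

# For this toy model the marginal is Gaussian with variance 1 + noise_std^2.
exact = np.exp(log_normal_pdf(x_obs, theta, np.sqrt(1.0 + 0.5**2)))
```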


How to do inference without evaluating the likelihood of the model?

Black-box Simulators Define Implicit Distributions

- A black-box simulator defines $p(x | \theta)$ as an implicit distribution, you can sample from it but you cannot evaluate it.

- Key Idea: Use a parametric distribution model $\mathbb{P}_\varphi$ to approximate the implicit distribution $\mathbb{P}$.

True $\mathbb{P}$

Samples $x_i \sim \mathbb{P}$

Model $\mathbb{P}_\varphi$

Conditional Density Estimation with Neural Networks

- I assume a forward model of the observations: \begin{equation} p( x, \theta ) = p(x | \theta) \ p(\theta) \nonumber \end{equation} All I ask is the ability to sample from the model, to obtain $\mathcal{D} = \{(x_i, \theta_i) \}_{i=1}^{N}$

- I am going to assume $q_\phi(\theta | x)$ a parametric conditional density

- Optimize the parameters $\phi$ of $q_{\phi}$ according to \begin{equation} \min\limits_{\phi} \sum\limits_{i} - \log q_{\phi}(\theta_i | x_i) \nonumber \end{equation} In the limit of large number of samples and sufficient flexibility \begin{equation} \boxed{q_{\phi^\ast}(\theta | x) \approx p(\theta | x)} \nonumber \end{equation}

$\Longrightarrow$ One can asymptotically recover the posterior by optimizing a parametric estimator over the Bayesian joint distribution.

$\Longrightarrow$ One can asymptotically recover the posterior by optimizing a Deep Neural Network over a simulated training set.
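A minimal sketch of this recipe on a toy problem where the true posterior is known. Everything here is an assumption made for illustration: the simulator is $\theta \sim \mathcal{N}(0, 1)$, $x = \theta + 0.5\,\epsilon$, and a linear-Gaussian family $q_\phi(\theta | x) = \mathcal{N}(a x + b, s^2)$ stands in for the deep network. The parameters $\phi = (a, b, \log s)$ are fit by gradient descent on the negative log-likelihood over simulated pairs.

```python
import numpy as np

rng = np.random.default_rng(0)
sigma = 0.5          # noise level of the toy simulator (assumed)
n_sims = 20_000

# Sample the joint distribution: theta ~ p(theta), x ~ p(x | theta).
theta = rng.normal(size=n_sims)
x = theta + sigma * rng.normal(size=n_sims)

# q_phi(theta | x) = N(a * x + b, s^2), with phi = (a, b, log_s).
a, b, log_s = 0.0, 0.0, 0.0
lr = 0.1
for _ in range(2000):
    s2 = np.exp(2.0 * log_s)
    r = theta - (a * x + b)                      # residuals of the predicted mean
    # Gradients of the mean negative log-likelihood  -log q_phi(theta_i | x_i).
    grad_a = np.mean(-r * x) / s2
    grad_b = np.mean(-r) / s2
    grad_log_s = 1.0 - np.mean(r**2) / s2
    a, b, log_s = a - lr * grad_a, b - lr * grad_b, log_s - lr * grad_log_s

# Analytic posterior for this toy model: N(x / (1 + sigma^2), sigma^2 / (1 + sigma^2)).
post_slope = 1.0 / (1.0 + sigma**2)
post_std = sigma / np.sqrt(1.0 + sigma**2)
print(a, b, np.exp(log_s), "vs", post_slope, 0.0, post_std)
```

The fitted density never evaluates the likelihood; it only sees samples $(x_i, \theta_i)$, and in this toy case the chosen family is flexible enough to contain the true posterior.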

Neural Density Estimation

Bishop (1994)

Dinh et al. 2016

- Mixture Density Networks \begin{equation} p(\theta | x) = \sum_i \pi_i(x) \ \mathcal{N}\left(\theta; \ \mu_i(x), \ \sigma_i(x) \right) \nonumber \end{equation}

- Conditional Normalizing Flows \begin{equation} p(\theta| x) = p_z \left( z = f^{-1}(\theta, x) \right) \left| \det \frac{\partial f^{-1}(\theta, x)}{\partial \theta} \right| \end{equation}
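Both families can be evaluated in a few lines in one dimension. The sketch below is illustrative only: the mixture parameters are fixed numbers standing in for network outputs at a given $x$, and the conditioner `mu, sigma` of the affine flow is a hypothetical choice. A mixture density is a weighted sum of Gaussians, and the flow density is the base density at $z = f^{-1}(\theta, x)$ times the Jacobian of $f^{-1}$ with respect to $\theta$.

```python
import numpy as np

def normal_pdf(t, mean, std):
    """Density of a 1D Gaussian."""
    return np.exp(-0.5 * ((t - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def mixture_density(theta, weights, means, stds):
    """p(theta | x) = sum_i pi_i N(theta; mu_i, sigma_i), parameters already evaluated at x."""
    theta = np.asarray(theta, dtype=float)[..., None]
    return np.sum(weights * normal_pdf(theta, means, stds), axis=-1)

def affine_flow_density(theta, x):
    """Conditional affine flow theta = mu(x) + sigma(x) * z, with z ~ N(0, 1)."""
    mu, sigma = 0.8 * x, 0.4 + 0.1 * x**2      # hypothetical conditioner
    z = (theta - mu) / sigma                    # z = f^{-1}(theta, x)
    jacobian = 1.0 / sigma                      # |d f^{-1} / d theta|
    return normal_pdf(z, 0.0, 1.0) * jacobian

grid = np.linspace(-10.0, 10.0, 20001)
dx = grid[1] - grid[0]

p_mix = mixture_density(grid, np.array([0.3, 0.7]), np.array([-1.0, 2.0]), np.array([0.5, 1.0]))
p_flow = affine_flow_density(grid, x=1.5)

# Both are normalized densities in theta.
mix_norm = np.sum(p_mix) * dx
flow_norm = np.sum(p_flow) * dx
```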

A variety of algorithms

A few important points:

- Amortized inference methods, which estimate $p(\theta | x)$, can greatly speed up posterior estimation once trained.

- Sequential Neural Posterior/Likelihood Estimation methods can actively sample simulations needed to refine the inference.

Automated Summary Statistics Extraction

- Introduce a parametric function $f_\varphi$ to reduce the dimensionality of the data while preserving information.

Information-based loss functions

- Variational Mutual Information Maximization $$ \mathcal{L} \ = \ \mathbb{E}_{x, \theta} [ \log q_\phi(\theta | f_\varphi(x)) ] \leq I(Y; \Theta) - H(\Theta), \quad \mbox{with} \ Y = f_\varphi(X) $$

- Information Maximization Neural Network $$\mathcal{L} \ = \ - | \det \mathbf{F} | \ \mbox{with} \ \mathbf{F}_{\alpha, \beta} = tr[ \mu_{\alpha}^t C^{-1} \mu_{\beta} ] $$
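As a rough illustration of the Fisher-based objective, the sketch below estimates $\mathbf{F}$ from simulations for a fixed, hand-written compression. The toy simulator, the sample mean used in place of a trained $f_\varphi$, and all function names are assumptions. Here $\mu_\alpha$ is taken to be the derivative of the mean summary with respect to parameter $\alpha$, estimated by finite differences with matched random seeds, and $C$ is the covariance of the summaries at the fiducial point.

```python
import numpy as np

def simulate(theta, n_sims, n_data=20, sigma=2.0, seed=0):
    """Toy simulator (assumed): n_data noisy measurements of theta per simulation."""
    rng = np.random.default_rng(seed)
    return theta + sigma * rng.normal(size=(n_sims, n_data))

def compress(x):
    """Stand-in for f_varphi: one summary per simulation (the sample mean)."""
    return x.mean(axis=1, keepdims=True)           # shape (n_sims, n_summaries)

def fisher_from_sims(theta_fid, step=0.1, n_sims=5000):
    """F = dmu C^{-1} dmu^T estimated from simulated summaries."""
    s_fid = compress(simulate(theta_fid, n_sims, seed=1))
    cov = np.atleast_2d(np.cov(s_fid, rowvar=False))
    # Same seed on both sides so the noise cancels in the finite difference.
    s_plus = compress(simulate(theta_fid + step, n_sims, seed=2))
    s_minus = compress(simulate(theta_fid - step, n_sims, seed=2))
    dmu = ((s_plus - s_minus).mean(axis=0) / (2.0 * step))[None, :]
    return dmu @ np.linalg.inv(cov) @ dmu.T         # shape (n_params, n_params)

F = fisher_from_sims(theta_fid=1.0)
loss = -np.abs(np.linalg.det(F))                    # objective to minimize
expected = 20 / 2.0**2                              # analytic Fisher: n_data / sigma^2
```

In an actual Information Maximization Neural Network the compression would be a network whose weights are updated to decrease this loss; the sketch only shows how the loss is evaluated.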

Example of application: Likelihood-Free parameter inference with DES SV

Suite of N-body + raytracing simulations: $\mathcal{D}$

takeaways

- Likelihood-Free Inference automates inference over numerical simulators.

  - Turns both summary extraction and inference problems into optimization problems

  - Deep learning allows us to solve these problems!

- In the context of upcoming surveys, this technique provides many advantages:

  - Amortized inference: near instantaneous parameter inference, extremely useful for time-domain.

  - Optimal information extraction: no more need for the restrictive modeling assumptions required to obtain tractable likelihoods.

Will we be able to exploit all of the information content of Euclid?

$\Longrightarrow$ Not right away, but it is not the fault of Deep Learning!

- Deep Learning has redefined the limits of our statistical tools, creating additional demand on the accuracy of simulations far beyond the power spectrum.

- Neural compression methods have the downside of being opaque. It is much harder to detect unknown systematics.

- We will need a significant number of large volume, high resolution simulations.